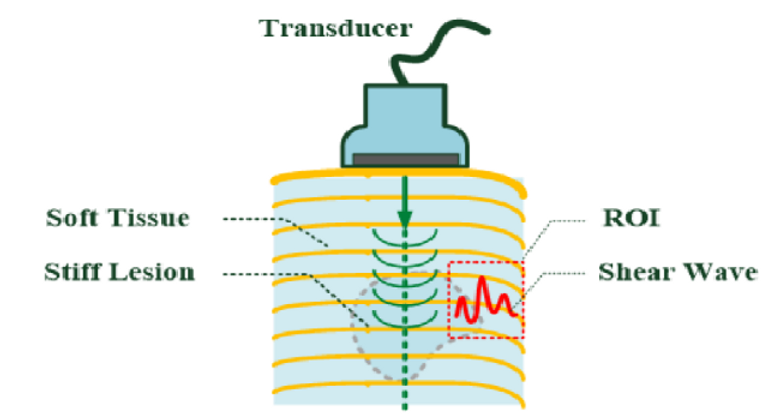

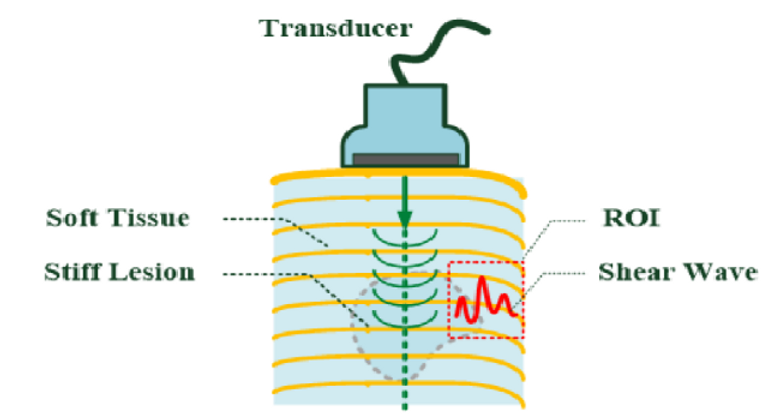

Breast cancer is the most common cancer among women in Taiwan, and the number of breast cancer cases reported annually continues to increase. In 2018, breast cancer ranked fourth in terms of mortality. Malignant breast lesions in early stages (stages 0–2) can be detected during regular screening, and early treatment with advanced medical therapies improves survival rates. Ultrasound imaging, including acoustic radiation force impulse (ARFI) imaging, is the first-line examination technique for locating breast lesion tissue, which can then be quantified by virtual touch tissue imaging (VTI). ARFI-VTI elastography is a breast imaging modality that produces two-dimensional (2D) images visualizing the texture details, elasticity, and morphological features of a region of interest (ROI). A 2D Harris corner convolution is applied to the digital images to suppress speckle noise and enhance the ARFI-VTI images so that lesion tissue can be extracted from the ROI. The 2D Harris corner convolution, maximum pooling, and random decision forests (RDF) are then integrated into a machine vision classifier that screens subjects for benign or malignant tumors. A total of 320 ARFI-VTI images were collected for the experiments. In the training stage, 122 images were randomly selected to train the RDF-based classifiers; the remaining images were randomly selected for performance evaluation via cross-validation in the recall stage. In 10-fold cross-validation, the proposed classifier achieved a mean sensitivity of 86.02%, a mean specificity of 87.63%, and a mean accuracy of 86.97%. These results suggest that the proposed machine vision classifier, applied to ARFI-VTI images of breast tumors, can be used for rapid screening of malignant and benign lesions.
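The abstract names the feature-extraction steps (Harris corner convolution followed by maximum pooling) but not their parameters. The following is a minimal sketch of those two steps in Python with NumPy. It assumes the textbook Harris formulation: Sobel gradients, a 3×3 box averaging window, and k = 0.04. All of these are assumptions, not values reported in the study. The helper names `conv2d`, `harris_response`, `max_pool`, and `roi_features` are hypothetical. The study describes the Harris step as suppressing speckle and enhancing the image; this sketch only computes the standard corner response R = det(M) − k·trace(M)² and is not the authors' implementation.

```python
import numpy as np

# Hypothetical parameters: the abstract does not report kernels, window size, or k.
SOBEL_X = np.array([[-1.0, 0.0, 1.0],
                    [-2.0, 0.0, 2.0],
                    [-1.0, 0.0, 1.0]])
SOBEL_Y = SOBEL_X.T


def conv2d(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Same-size 2D convolution with zero padding (odd-sized kernels only)."""
    kh, kw = kernel.shape
    ph, pw = kh // 2, kw // 2
    padded = np.pad(image.astype(float), ((ph, ph), (pw, pw)), mode="constant")
    flipped = kernel[::-1, ::-1]  # flip the kernel so this is convolution, not correlation
    h, w = image.shape
    out = np.zeros((h, w), dtype=float)
    for i in range(kh):
        for j in range(kw):
            out += flipped[i, j] * padded[i:i + h, j:j + w]
    return out


def harris_response(image: np.ndarray, k: float = 0.04, window: int = 3) -> np.ndarray:
    """Per-pixel Harris response R = det(M) - k * trace(M)^2."""
    ix = conv2d(image, SOBEL_X)                    # horizontal gradient
    iy = conv2d(image, SOBEL_Y)                    # vertical gradient
    box = np.ones((window, window)) / window**2    # uniform averaging window
    sxx = conv2d(ix * ix, box)                     # entries of the structure tensor M
    syy = conv2d(iy * iy, box)
    sxy = conv2d(ix * iy, box)
    det = sxx * syy - sxy**2
    trace = sxx + syy
    return det - k * trace**2                      # >0 corner, <0 edge, ~0 flat


def max_pool(x: np.ndarray, size: int = 2) -> np.ndarray:
    """Non-overlapping size x size max pooling; rows/columns that don't fit are cropped."""
    h, w = (x.shape[0] // size) * size, (x.shape[1] // size) * size
    x = x[:h, :w]
    return x.reshape(h // size, size, w // size, size).max(axis=(1, 3))


def roi_features(roi: np.ndarray, pool: int = 2) -> np.ndarray:
    """Flattened, max-pooled Harris map used as a classifier input vector."""
    return max_pool(harris_response(roi), pool).ravel()


if __name__ == "__main__":
    roi = np.zeros((20, 20))
    roi[5:15, 5:15] = 1.0  # synthetic bright square standing in for a lesion
    r = harris_response(roi)
    print(r[5, 5] > 0, r[5, 10] < 0)  # corner pixel vs. edge pixel
    print(roi_features(roi).shape)
```

In a complete pipeline, these feature vectors would then be passed to a random decision forest, for example scikit-learn's `RandomForestClassifier`. That step is omitted here to keep the sketch dependency-free.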
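The reported figures are the standard confusion-matrix measures: sensitivity = TP/(TP+FN), specificity = TN/(TN+FP), and accuracy = (TP+TN)/(TP+TN+FP+FN). The sketch below computes them in Python and averages per-fold values over a 10-fold split. It treats malignant as the positive class, which is an assumption, though it is the usual screening convention. The shuffling seed and the helper names `screening_metrics`, `k_fold_indices`, and `mean_fold_metrics` are hypothetical. The abstract also does not specify exactly how the 122 training images relate to the 10 folds.

```python
import random
from typing import List, Sequence, Tuple


def confusion_counts(y_true: Sequence[int], y_pred: Sequence[int]) -> Tuple[int, int, int, int]:
    """Return (TP, TN, FP, FN), assuming 1 = malignant (positive), 0 = benign."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    return tp, tn, fp, fn


def screening_metrics(y_true: Sequence[int], y_pred: Sequence[int]) -> Tuple[float, float, float]:
    """(sensitivity, specificity, accuracy); NaN when a denominator is zero."""
    tp, tn, fp, fn = confusion_counts(y_true, y_pred)
    nan = float("nan")
    total = tp + tn + fp + fn
    sensitivity = tp / (tp + fn) if tp + fn else nan
    specificity = tn / (tn + fp) if tn + fp else nan
    accuracy = (tp + tn) / total if total else nan
    return sensitivity, specificity, accuracy


def k_fold_indices(n: int, k: int = 10, seed: int = 0) -> List[List[int]]:
    """Shuffle indices 0..n-1 and deal them into k disjoint folds of near-equal size."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    return [idx[i::k] for i in range(k)]


def mean_fold_metrics(per_fold: Sequence[Tuple[float, float, float]]) -> Tuple[float, ...]:
    """Average each metric over the folds ("mean sensitivity", etc.)."""
    n = len(per_fold)
    return tuple(sum(m[i] for m in per_fold) / n for i in range(3))


if __name__ == "__main__":
    folds = k_fold_indices(320, k=10)
    print([len(f) for f in folds])
    print(screening_metrics([1, 1, 1, 0, 0], [1, 1, 0, 0, 0]))
```

Averaging per-fold values, as "mean sensitivity" suggests, can differ slightly from computing the metrics once on predictions pooled across all folds. The sketch follows the per-fold reading.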

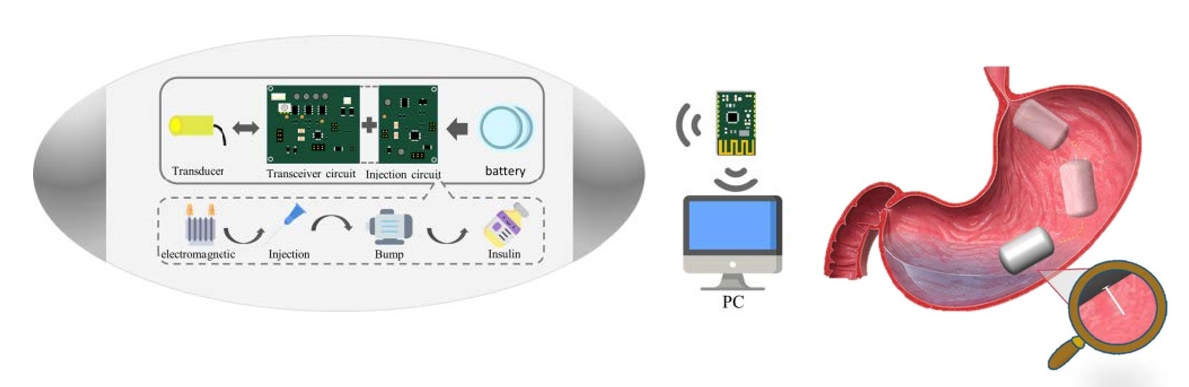

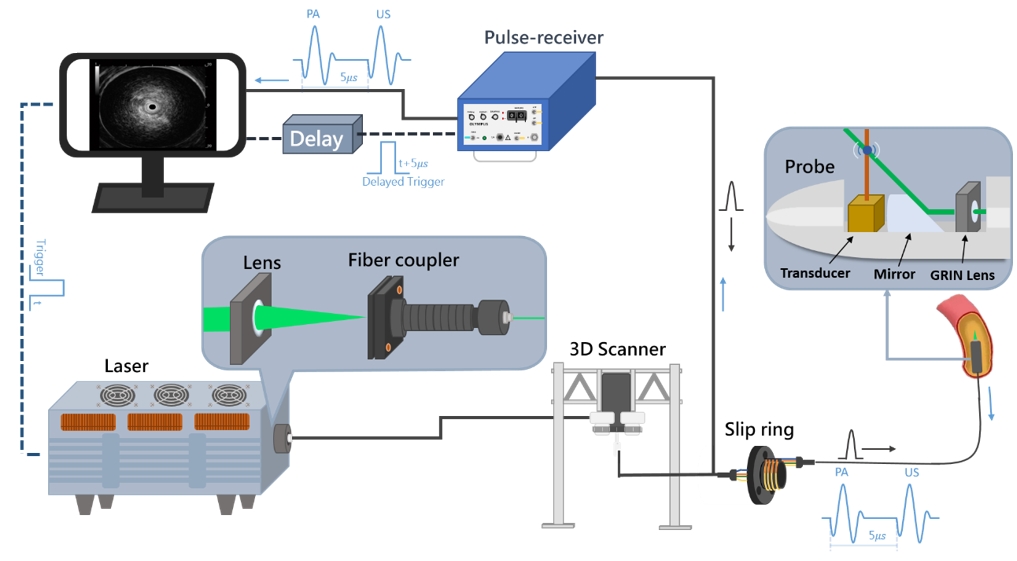

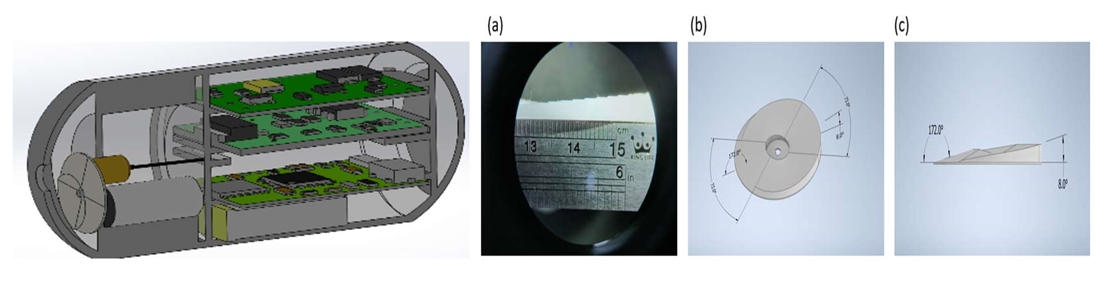

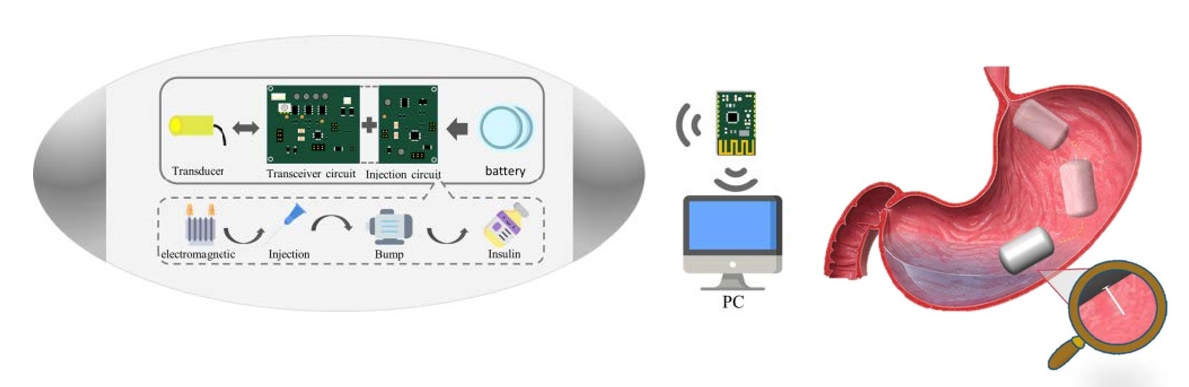

Most conventional wireless ultrasound endoscopic capsules (USECs) currently on the market offer only imaging functions. Building on advances in ultrasound (US)-assisted puncture technology, this design targets microcapsule-based injection in diabetic patients, using a membrane liquid lens (MLL) in combination with a US probe. The injection system performs intragastric insulin injection: the MLL is adjusted to change the viewing direction, and the injection position is determined through US imaging. This approach addresses the risk of perforation and the imaging limitations of existing capsules. It allows the target site to be located and insulin to be injected accurately, and it avoids the power consumption of the motors used in commercially available capsules.

In preclinical animal experiments, the design combined in vivo US positioning and observation with a drug injection device. Using the MLL with an ultrasound probe, the system can display the returned US image and inject drugs. This approach replaces motor-driven rotation and extends the operating time. The viewing angle can exceed 45 degrees, indicating the potential of the system as a safe drug delivery device.